This is just what it says*: I intend to post something at least once a month but in lieu of any finished articles, here are various notes I made during May that never got developed into anything more substantial, some of which will probably seem mysterious if read later, since I don’t think I’ll bother to explain the context.

*tragically, that title is a kind of pun though

“Let them eat space travel”

***

Publishing light-hearted articles that debate whether AI deserves “human rights,” while not covering the erosion of actual human rights because you don’t want to be ‘political’, is political.

***

The pressure to make politicians and news agencies use the word “genocide” to describe the Israeli government’s attacks on Gaza is understandable (because, by the normal definition of the word as it’s used, they are committing genocide: “the crime of intentionally destroying part or all of a national, ethnic, racial, or religious group, by killing people or by other methods” – Cambridge Dictionary) but it’s also kind of self-defeating. The focus on the word that represents their actions, rather than the actions themselves, creates an instant response which, given the nature of genocide, should be horror, but is more often dismissal; “Genocide? Oh, you’re one of those ‘Free Palestine’ people.” A more important question to ask politicians and the media is possibly, if what is being done to the people of Gaza isn’t genocide, is it therefore somehow okay? Would any non-psychopath presented with an estimated death toll as high as 62,000 Palestinian people since October 7th 2023 think “oh well, it’s a lot but at least it isn’t genocide”? The word is important for moral, legal and factual reasons, but at this point it seems, crazy though it is, more likely to distract from the reality that we are seeing every day, than to really bring it home.

***

Even if the climate emergency is allowed to escalate with no serious attempt to alter it for another decade (which would be, or just as likely, will be, disastrous), it would still be infinitely easier to prevent the Earth from becoming an uninhabitable and Mars-like wasteland than it would be to make Mars into a habitable and Earth-like home – especially for any meaningful number of people. Those who are most determined that colonising Mars is a good idea are essentially not serious people, or at least are not serious about the future of mankind. ‘Conquering new worlds’ is just a fun, romantic and escapist idea that appeals more than looking after the world we have; it’s a typical expression of the political right’s obsession with the welfare of imaginary future people as opposed to the welfare of actual human beings who exist.

***

It’s becoming ever more obvious that the UK has a media problem. For a decade now, a particular politician (don’t even want to type his name) with a consistent track record of being unpopular and not winning elections – whose party (or parties) so far haven’t come third or even fourth in a general election – has been foisted on the public to the point where he’s an inescapable presence in British culture. He’s on TV, in newspapers and online on all of the major news outlets, every day, far more than the leader of the official opposition, let alone the leaders of the third and fourth largest parties in the country.

By this point, this obsession has seriously started to shape public discourse. It’s fuelled essentially by the fact that the small group of very wealthy people in charge of the traditional media are his peers – they support him and his views because they belong to the same millionaire class and milieu. This was the group that made Brexit happen, portraying it as a movement of ‘the people’ when the real impetus for it was the fact that the EU was closing tax loopholes for the millionaire class.

We are now in the Stalinist phase of Brexit (a funny idea, since its adherents are virulently anti-communist) where the only people who have benefitted from Brexit and continue to benefit from it are that ruling class (who still don’t want to pay taxes). As ‘the people’ inevitably fall out of love with Brexit, since it’s damaged the economy, made foreign holidays more difficult and expensive and basically failed to provide any material gains, let alone a rise in the standard of living in the UK, the Brexit ideology becomes stronger and more corrosive, emotive and unhinged. Basically, the media can’t make people satisfied with having less, but it can try to make them angry, and to direct their anger.

The media’s obsession with the views of the man who has become the figurehead for Brexit distracts from the actual views of the public, but naturally it affects them too. Not surprisingly, constant positive coverage of the man and his colleagues has made him and his party more successful – but, after a decade, not much more successful, really, given how inescapable his presence has become. But every little increase in popularity is fed into the circular narrative and framed as an unstoppable rise, as if that rise wasn’t essentially being created by those reporting on it. But really, as with Brexit itself, it’s mostly about money.

All of which raises questions; firstly and most importantly, what can be done about it? The readership of even the most popular newspapers isn’t especially big now, but those newspapers are also major presences on social media and on TV. Most importantly (and quite bizarrely, when you think about it) the major TV broadcasters in the UK still look to newspapers to gauge the political zeitgeist, rather than the other way around (or, rather than both TV and newspapers looking to the internet, which would be more accurate but probably not better). The obvious response is to boycott the newspapers and/or TV, but for as long as Parliament still looks to the press barons to find out the mood of the public, that can only remove the governing of the country even further from the lives and opinions of the people.

A more positive answer would be to promote alternative politics through what media is available; but again that can only work up to a point, because if politicians are still in thrall to the same old newspapers and broadcasters then, again, Parliament becomes even more of a closed-off, cannibalistic circuit, isolated from popular opinion except when a general election comes around.

Complaining to one’s MP is probably the most sensible thing to do, but unless they happen to be one of the five MPs currently representing the media’s chosen party in Parliament, then they almost certainly agree with you and don’t know what to do about it either. And yet surely it can’t be an insurmountable problem?

A less important, but more heart-breaking question is a hypothetical one; what would the country be like now if the media was obsessed with a party with a progressive political agenda (the Green Party, for instance, are actually more popular than ever, and despite a few cranks and weirdos, mostly a positive force)? What if, instead of spending a decade spreading intolerance, division, hatred, racism etc so that a few millionaire businessmen could pay less tax, they had been pushing ideas of equality and environmentalism into the culture? Money is at the heart of it all really, and this frustrating situation actually led to me taking the unusual (for me) and pointless step of writing an email to the Prime Minister that he will never have read, part of which said;

Surely one of the most effective ways to neutralise the poisonous rhetoric of the far right is not to pursue its populist talking points, but to materially improve the lives of the people of the UK? In the last general election – less than a year ago – a vote for Labour was for most people a vote for change, not for more of the same. If Britain wanted divisive rhetoric, attacking migrants and minorities, there were far more obvious people to vote for.

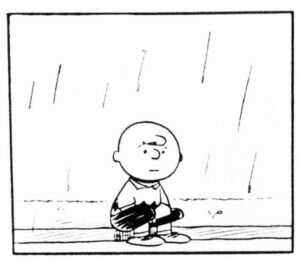

So anyway; hopefully June is better.